Complete Guide to Setting Up EFK Stack with Kafka, Redis, Beats, and Spring Boot for Microservices Logging

This diagram illustrates a centralized logging system for microservices using the EFK Stack (Elasticsearch, Fluentd, Kibana), Kafka, Redis, and Beats. Here's a brief breakdown of the flow:

Microservices (Spring Boot):

Each microservice generates logs, which are collected by Beats (typically Filebeat, which tails log files; Metricbeat ships metrics rather than logs).

Beats:

Beats agents forward the log data to Kafka.

Kafka:

Kafka acts as a durable buffer, so downstream consumers can fall behind or restart without losing log messages.

Redis:

Redis can act as a caching layer or intermediate queue to handle the log flow efficiently.

Fluentd:

Fluentd processes, transforms, and enriches log data before forwarding it to Elasticsearch.

Elasticsearch:

Stores and indexes the log data for search and analysis.

Kibana:

Provides a user-friendly interface for visualizing and analyzing logs from Elasticsearch.
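The flow above can be modeled in a few lines of plain Java to make the hand-offs between stages concrete. This is a toy, in-memory sketch, not real client code: a bounded `BlockingQueue` stands in for the Kafka topic, a `HashMap` stands in for the Elasticsearch index, and Redis is left out. The class and method names (`LogPipelineModel`, `ship`, `processAll`, `search`) are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Toy, in-memory model of the log flow. No real Beats, Kafka, Fluentd,
// or Elasticsearch clients are used; each stage is a plain Java method.
final class LogPipelineModel {

    // Stand-in for a Kafka topic: a bounded buffer between shippers and processors.
    private final BlockingQueue<String> buffer = new ArrayBlockingQueue<>(1_000);

    // Stand-in for an Elasticsearch index: documents grouped by service name.
    private final Map<String, List<Map<String, String>>> index = new HashMap<>();

    // "Beats" stage: ship a raw log line into the buffer.
    // offer() returns false instead of blocking when the buffer is full.
    boolean ship(String service, String message) {
        return buffer.offer(service + "|" + message);
    }

    // "Fluentd" stage: drain the buffer, parse and enrich each record, then "index" it.
    int processAll() {
        List<String> batch = new ArrayList<>();
        buffer.drainTo(batch);
        for (String raw : batch) {
            String[] parts = raw.split("\\|", 2); // service | message
            Map<String, String> doc = new HashMap<>();
            doc.put("service", parts[0]);
            doc.put("message", parts[1]);
            doc.put("pipeline", "efk-demo"); // hypothetical enrichment field
            index.computeIfAbsent(parts[0], k -> new ArrayList<>()).add(doc);
        }
        return batch.size();
    }

    // "Kibana" stage: look up indexed documents for one service.
    List<Map<String, String>> search(String service) {
        return index.getOrDefault(service, List.of());
    }

    public static void main(String[] args) {
        LogPipelineModel pipeline = new LogPipelineModel();
        pipeline.ship("order-service", "Order 42 created");
        pipeline.ship("payment-service", "Payment for order 42 accepted");
        System.out.println("processed=" + pipeline.processAll());
        System.out.println(pipeline.search("order-service"));
    }
}
```

The design choice it illustrates is decoupling: shippers only append to the buffer, and the processing stage drains it at its own pace, which is the role Kafka plays between Beats and Fluentd.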

Here’s a step-by-step guide to implementing an end-to-end centralized logging system for Spring Boot microservices using the architecture described above. It covers creating the microservices and configuring logging with the EFK Stack and Kafka.

Step 1: Set Up EFK Stack

1.1 Elasticsearch

- Install Elasticsearch locally or use a cloud-hosted version (Elastic Cloud).

- Configure elasticsearch.yml for network binding and authentication.
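After editing elasticsearch.yml, a quick way to confirm the node is reachable is to call the `_cluster/health` endpoint. The sketch below uses only the JDK's `java.net.http` client and assumes an unsecured node at `http://localhost:9200`; Elasticsearch 8.x enables security by default, in which case you would need HTTPS and credentials (not shown). The class and helper names are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Smoke test for a local Elasticsearch node (assumes no TLS and no auth).
final class EsHealthCheck {

    // Matches the "status" field of the _cluster/health response, e.g. "status":"green".
    private static final Pattern STATUS = Pattern.compile("\"status\"\\s*:\\s*\"(\\w+)\"");

    static String parseStatus(String json) {
        Matcher m = STATUS.matcher(json);
        return m.find() ? m.group(1) : "unknown";
    }

    // A single-node dev cluster may report yellow because replica shards
    // cannot be assigned, so green and yellow are both treated as usable here.
    static boolean isUsable(String status) {
        return status.equals("green") || status.equals("yellow");
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(
                URI.create("http://localhost:9200/_cluster/health")).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        String status = parseStatus(response.body());
        System.out.println("cluster status: " + status + (isUsable(status) ? " (ok)" : " (check config)"));
    }
}
```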

1.2 Kibana

- Install Kibana and connect it to Elasticsearch in kibana.yml:

1.3 Fluentd

- Install Fluentd and set up a configuration file (fluent.conf) to forward logs to Elasticsearch:
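If the Elasticsearch output in fluent.conf uses fluent-plugin-elasticsearch with `logstash_format true`, records are written to one index per day named PREFIX-YYYY.MM.DD, where the default prefix is `logstash`. The small helper below reproduces that naming so you know which index pattern to expect in Kibana. It assumes the plugin defaults (a `-` separator, the `%Y.%m.%d` date format, and UTC dates); the class name is hypothetical.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Computes the daily index name that logstash_format-style output would target
// (assumes default separator, default date format, and UTC-based dates).
final class FluentdIndexName {

    // "yyyy.MM.dd" mirrors the default logstash_dateformat "%Y.%m.%d".
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    static String indexFor(String prefix, Instant eventTime) {
        return prefix + "-" + DAY.format(eventTime);
    }

    public static void main(String[] args) {
        System.out.println(indexFor("logstash", Instant.now()));
        System.out.println(indexFor("microservices", Instant.parse("2024-01-15T23:59:59Z")));
    }
}
```

In Kibana, a data view (index pattern) such as `logstash-*` then matches every daily index.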

1.4 Kafka

- Install Kafka and start ZooKeeper and the Kafka brokers (recent Kafka versions can run in KRaft mode without ZooKeeper, and Kafka 4.0 requires KRaft).

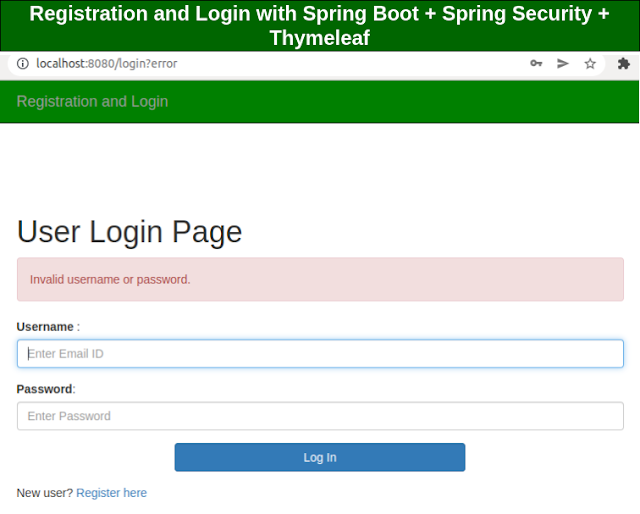

Step 2: Create 3 Spring Boot Microservices

2.1 Common Setup

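- Use Spring Initializr (start.spring.io) to create 3 projects:

  - Order Service

  - Payment Service

  - Notification Service

- Add dependencies:

  - Spring Web (spring-boot-starter-web)

  - Spring Boot Actuator (spring-boot-starter-actuator)

  - Spring for Apache Kafka (spring-kafka)

  - Logback (Spring Boot's default logger) plus a JSON encoder such as logstash-logback-encoder; Spring Boot 3.4+ can also emit structured JSON logs natively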

2.2 Configure Centralized Logging

Update application.yml for all services:

Add service context in the code:

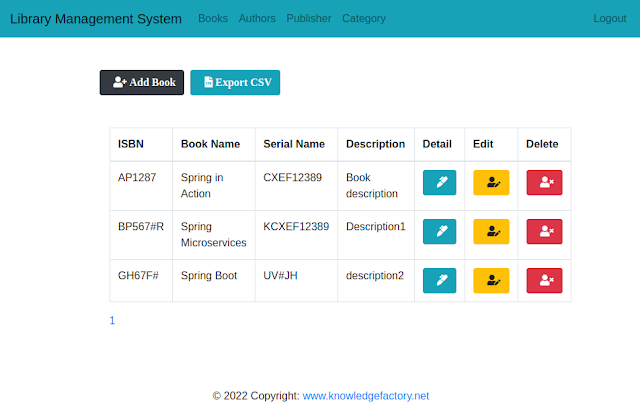
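Service context such as the service name is commonly attached through SLF4J's MDC (`MDC.put`), and a JSON encoder then writes every MDC entry into each log line. To show the resulting shape without any dependencies, the sketch below uses a `ThreadLocal` map as a stand-in for MDC and formats the line itself. The field names (`@timestamp`, `level`, `service`, `requestId`) are assumptions chosen to resemble common JSON encoder output, not what any particular encoder emits.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Plain-Java stand-in for SLF4J's MDC plus a JSON encoder: a per-thread context map
// whose entries are copied into every log line.
final class ServiceLogContext {

    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(LinkedHashMap::new);

    static void put(String key, String value) {
        CONTEXT.get().put(key, value);
    }

    static void clear() {
        CONTEXT.remove();
    }

    // Minimal JSON string escaping for backslashes, quotes, and newlines only.
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Builds one JSON log line: timestamp, level, message, then all context fields.
    static String jsonLine(Instant timestamp, String level, String message) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("@timestamp", timestamp.toString());
        fields.put("level", level);
        fields.put("message", message);
        fields.putAll(CONTEXT.get());
        StringJoiner json = new StringJoiner(",", "{", "}");
        fields.forEach((k, v) -> json.add("\"" + escape(k) + "\":\"" + escape(v) + "\""));
        return json.toString();
    }

    public static void main(String[] args) {
        put("service", "order-service");
        put("requestId", "req-123"); // hypothetical example value
        System.out.println(jsonLine(Instant.now(), "INFO", "Order created"));
        clear(); // per-thread context must be cleared, e.g. at the end of each request
    }
}
```

Clearing the context matters because servlet containers reuse threads; a value left behind would leak into the next request's logs.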

2.3 Create Basic Endpoints

Order Service:

Payment Service:

Notification Service:
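Each of these endpoints mainly needs to log something meaningful per request. In Spring Boot they would be `@RestController` classes; to keep the example dependency-free, this sketch swaps in the JDK's built-in `com.sun.net.httpserver` server for the Order Service. The port 8081, the `/orders?id=` query format, and all names are hypothetical. The request-handling logic is a plain static method so it can be tested without starting the server.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.logging.Logger;

// Dependency-free stand-in for an Order Service endpoint (not Spring code).
final class OrderEndpointSketch {

    private static final Logger LOG = Logger.getLogger("order-service");

    // Business logic kept separate from HTTP wiring: log the request, build the reply.
    static String handleOrderRequest(String orderId) {
        LOG.info("Order request received: " + orderId);
        return "Order " + orderId + " received";
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(8081), 0);
        server.createContext("/orders", exchange -> {
            // e.g. GET /orders?id=42 has the query string "id=42"
            String query = exchange.getRequestURI().getQuery();
            String id = (query != null && query.startsWith("id=")) ? query.substring(3) : "unknown";
            byte[] body = handleOrderRequest(id).getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        System.out.println("Listening on http://localhost:8081/orders?id=42");
    }
}
```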

Step 3: Kafka Integration

3.1 Add Kafka Configuration

Add Kafka dependencies to pom.xml:

Configure Kafka in application.yml:
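The producer settings that matter most for log shipping are the bootstrap servers, the serializers, and the durability and batching trade-offs. The sketch below expresses them as the `java.util.Properties` object that kafka-clients' `KafkaProducer` accepts; the client itself is not included, so the block runs on the JDK alone. In Spring Boot, the equivalent settings live under `spring.kafka.*` in application.yml (for example `spring.kafka.bootstrap-servers` and `spring.kafka.producer.acks`). The specific values (acks=1, linger.ms=50, lz4 compression) and the class name are illustrative assumptions, not tuned recommendations.

```java
import java.util.Properties;

// Producer properties for a log-shipping producer (values are illustrative).
final class LogProducerConfig {

    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("acks", "1");               // leader-only ack: lower latency, small risk of loss
        props.put("linger.ms", "50");         // wait up to 50 ms so log records are batched
        props.put("compression.type", "lz4"); // log text usually compresses well
        return props;
    }

    public static void main(String[] args) {
        producerProps("localhost:9092").forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```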

3.2 Send Logs to Kafka

In logback-spring.xml, add a Kafka appender (Logback does not include one; a third-party library such as logback-kafka-appender provides it):
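Conceptually, a Kafka appender receives each log event, serializes it, and hands it to a Kafka producer. The sketch below shows that shape using `java.util.logging`'s `Handler` API as a stand-in for a Logback appender. The `sender` callback is a hypothetical hook that, in a real setup, would wrap `KafkaProducer.send`; the class name and the pipe-delimited payload are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.logging.Handler;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

// Stand-in for a Kafka appender: formats each record and forwards it to a sender hook.
final class ForwardingLogHandler extends Handler {

    private final String service;
    private final Consumer<String> sender; // hypothetical hook, e.g. wrapping KafkaProducer.send

    ForwardingLogHandler(String service, Consumer<String> sender) {
        this.service = service;
        this.sender = sender;
    }

    @Override
    public void publish(LogRecord record) {
        if (!isLoggable(record)) {
            return; // respect this handler's level and filter
        }
        // Simple pipe-delimited payload; a real appender would emit JSON.
        sender.accept(service + "|" + record.getLevel() + "|" + record.getMessage());
    }

    @Override
    public void flush() {
        // Nothing is buffered here; a Kafka-backed sender would call producer.flush().
    }

    @Override
    public void close() {
        // A Kafka-backed sender would close the producer here.
    }

    public static void main(String[] args) {
        List<String> sent = new ArrayList<>();
        Logger logger = Logger.getLogger("payment-service");
        logger.setUseParentHandlers(false); // avoid duplicate console output
        logger.addHandler(new ForwardingLogHandler("payment-service", sent::add));
        logger.info("Payment accepted");
        logger.fine("Filtered out: FINE is below the inherited INFO level");
        System.out.println(sent);
    }
}
```

A production appender also has to avoid blocking application threads when Kafka is unavailable, which is why Kafka appenders typically send asynchronously.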

Step 4: Fluentd Configuration

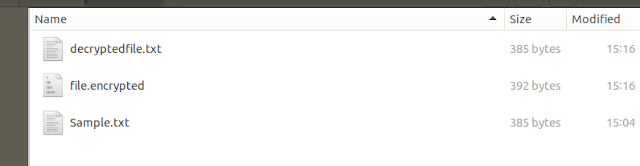

- Configure Fluentd to consume logs from Kafka (for example with the kafka_group input from fluent-plugin-kafka) and send them to Elasticsearch using the output configured in Step 1.3.
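On the output side, fluent-plugin-elasticsearch sends buffered records to Elasticsearch in batches through the `_bulk` API, whose body is newline-delimited JSON: an action line, then the document, repeated, with a trailing newline. The helper below builds such a body in plain Java, which can be handy for replaying sample log records into Elasticsearch by hand while debugging the pipeline. The class name and sample index are hypothetical, and each document is assumed to already be single-line JSON.

```java
import java.util.List;

// Builds an Elasticsearch _bulk request body (NDJSON) for a list of JSON documents.
final class BulkBodyBuilder {

    // Each document needs an action line followed by the document itself,
    // one JSON object per line, and the body must end with a newline.
    static String bulkBody(String index, List<String> jsonDocs) {
        StringBuilder body = new StringBuilder();
        for (String doc : jsonDocs) {
            body.append("{\"index\":{\"_index\":\"").append(index).append("\"}}\n");
            body.append(doc).append('\n');
        }
        return body.toString();
    }

    public static void main(String[] args) {
        String body = bulkBody("logstash-2024.01.15", List.of(
                "{\"service\":\"order-service\",\"message\":\"Order 42 created\"}",
                "{\"service\":\"payment-service\",\"message\":\"Payment accepted\"}"));
        System.out.print(body);
        // To send it: POST to http://localhost:9200/_bulk with Content-Type: application/x-ndjson
    }
}
```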

Step 5: Testing and Verification

- Run All Microservices: Start Order, Payment, and Notification services.

- Log Requests: Make requests to their respective endpoints using Postman or curl.

- Verify Logs:

  - Check Kafka topics for logs using kafka-console-consumer.

  - Ensure logs are forwarded to Elasticsearch and visualize them in Kibana.

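Besides Kibana, you can confirm that log documents reached Elasticsearch with a URI search against the daily indices. The sketch assumes an unsecured node at localhost:9200, indices matching `logstash-*` (the fluent-plugin-elasticsearch default when `logstash_format` is enabled), and a `service` field in each document; all three are assumptions to adjust to your setup, and the class name is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Queries Elasticsearch directly to check that logs from one service were indexed.
final class VerifyLogsInElasticsearch {

    // Builds a URI search (query-string syntax in the q parameter) against every
    // index matching the pattern, returning at most 5 hits.
    static URI searchUri(String baseUrl, String indexPattern, String query) {
        String q = URLEncoder.encode(query, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/" + indexPattern + "/_search?q=" + q + "&size=5");
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        URI uri = searchUri("http://localhost:9200", "logstash-*", "service:order-service");
        HttpResponse<String> response = HttpClient.newHttpClient().send(
                HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```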

Step 6: Optional Enhancements

- Add a Distributed Tracing System (e.g., Micrometer Tracing + Zipkin; Spring Cloud Sleuth fills this role only on Spring Boot 2.x) for better observability.

- Include Log Enrichment with metadata (e.g., request ID, trace ID).

- Deploy all components using Docker Compose or Kubernetes for production readiness.
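Request-ID enrichment usually means reusing an ID supplied by the caller (or gateway) and generating one only at the edge, so every service logs the same value for a given request. A minimal sketch follows; the `X-Request-Id` header is a common convention rather than a standard, and the class name is hypothetical. In a Spring Boot service you would put the resolved value into the MDC for the duration of the request and forward it on outgoing calls; with Micrometer Tracing, trace and span IDs are typically added to the MDC for you.

```java
import java.util.UUID;

// Resolves the request ID to log: reuse the caller's value, or generate one at the edge.
final class RequestIds {

    // Conventional header name (an assumption; match whatever your gateway uses).
    static final String HEADER = "X-Request-Id";

    static String resolve(String incomingHeaderValue) {
        if (incomingHeaderValue != null && !incomingHeaderValue.isBlank()) {
            return incomingHeaderValue.trim(); // propagate the existing ID unchanged
        }
        return UUID.randomUUID().toString(); // first hop: start a new ID
    }

    public static void main(String[] args) {
        System.out.println(HEADER + ": " + resolve("abc-123"));
        System.out.println(HEADER + ": " + resolve(null));
    }
}
```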

This implementation sets up a complete, scalable logging solution for your Spring Boot microservices.

When to Use This Architecture

High Traffic Microservices: Ideal for large systems with many microservices generating high log volumes.

Real-time Monitoring: Needed for real-time log analysis and quick issue detection.

Scalable Infrastructure: Useful when the system grows and requires scalable logging.

Distributed Systems: Aggregates logs from multiple microservices for easier debugging.

Structured Logs: Efficient when logs are in structured formats (e.g., JSON) that need fast querying.

Improved Debugging: Helps trace requests across services for distributed debugging.

When NOT to Use This Architecture

Small Applications: Overkill for small or monolithic apps.

Low Log Volume: Unnecessary for applications with minimal log traffic.

Short-term Projects: Not needed for prototypes or projects without scalability demands.

Limited Resources: Too resource-intensive for teams with limited capacity.

Simple Debugging: Unnecessary for apps with basic logging needs.
